As engineers, we measure everything: the performance of database queries, the response time of APIs, network latency, memory usage, CPU utilization, and more.

We run these benchmarks, gather numbers, and then make changes that affect millions of end users and thousands of servers. The uncomfortable truth is that most of us are operating in a fog when it comes to interpreting those numbers properly.

You've likely observed this scenario before: two engineers benchmark the same system and get different results. One claims the new caching layer improved response time by 15%. The other disagrees and says it worsened performance. Both have numbers, both have confidence, and both could be wrong.

What is missing? A good understanding of probability and statistics as they relate to real-world engineering systems.

Why Probabilistic Thinking Matters in System Benchmarks

In the real world, systems are noisy and non-deterministic. Your web server does not respond in exactly the 150ms you expect every time; some requests take a little longer, others a little less. Factors like CPU load, network traffic, and background processes cause small performance variations. That's just how real systems behave: there is always some level of variability and randomness involved.

Rather than producing identical results on every run, these systems exhibit:

- Natural variability: Background processes, garbage collection, thermal throttling

- Environmental noise: Network congestion, disk I/O contention, CPU scheduling

- Measurement uncertainty: Timer resolution, system call overhead, instrumentation impact

The point is that without statistical tools to handle this variability, we will make bad engineering decisions based on poor or misinterpreted data.

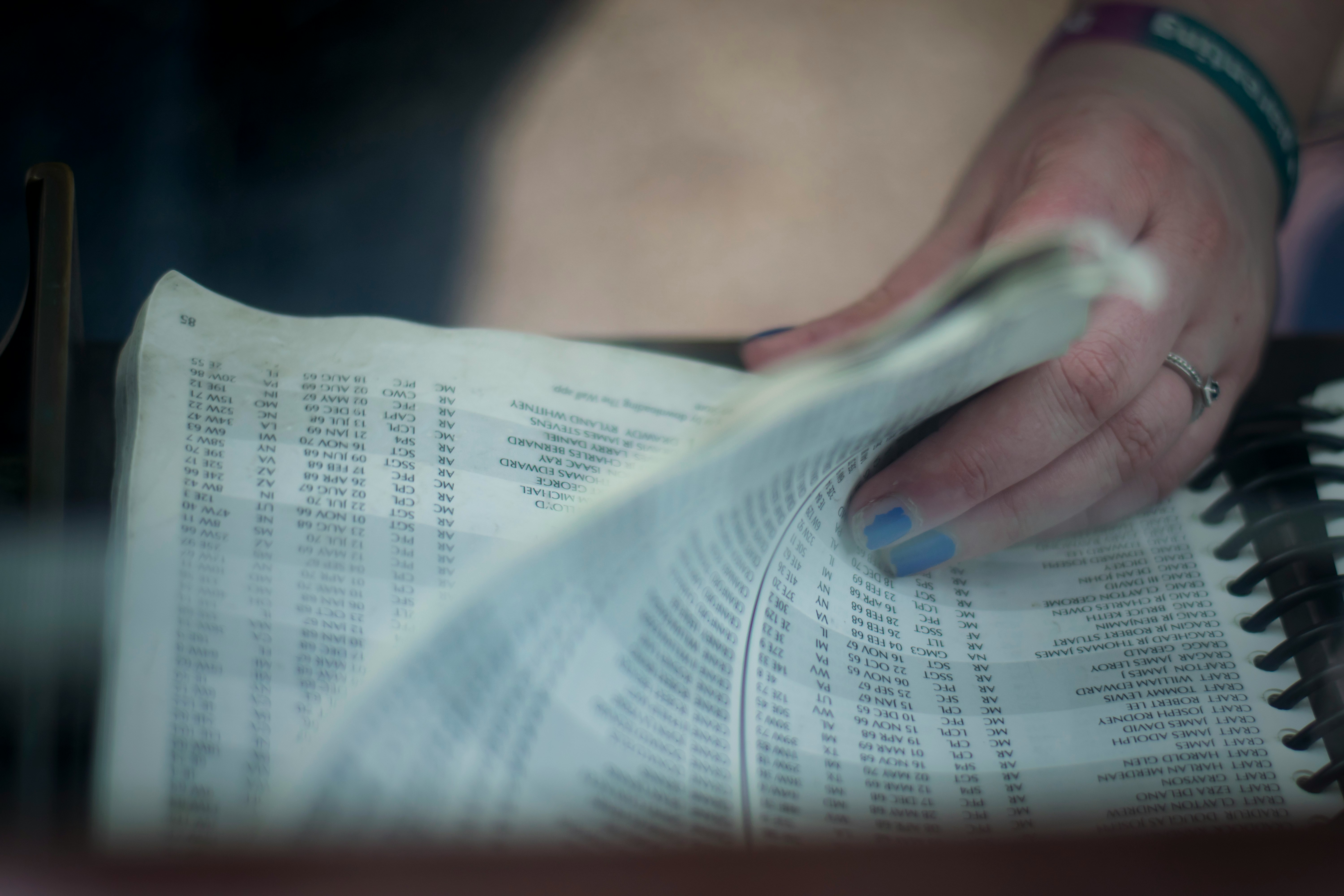

Here's a real example: An engineer benchmarks two API endpoints and gets these results:

Endpoint A: 145ms, 152ms, 148ms, 151ms, 149ms

Endpoint B: 147ms, 153ms, 146ms, 150ms, 154ms

Quick glance: Endpoint A looks faster (average 149ms vs 150ms). But is this difference meaningful, or just noise? Without proper statistical analysis, you can't tell.
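
To put numbers on that question, here is a minimal sketch using only Python's standard library. It compares the gap between the two means with the spread inside each sample. The variable names are illustrative, and this is a first look rather than a formal test.

```python
import statistics

# Measurements from the example above, in milliseconds.
endpoint_a = [145, 152, 148, 151, 149]
endpoint_b = [147, 153, 146, 150, 154]

mean_a = statistics.mean(endpoint_a)
mean_b = statistics.mean(endpoint_b)

# Sample standard deviation (n - 1 in the denominator).
stdev_a = statistics.stdev(endpoint_a)
stdev_b = statistics.stdev(endpoint_b)

gap = mean_b - mean_a

print(f"A: mean={mean_a:.1f}ms stdev={stdev_a:.2f}ms")
print(f"B: mean={mean_b:.1f}ms stdev={stdev_b:.2f}ms")
print(f"gap between means: {gap:.1f}ms")
```

The 1ms gap is smaller than the standard deviation of either sample (about 2.7ms for A and 3.5ms for B), so five runs each are not enough to separate the two endpoints.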

This is where probability and statistics become essential engineering tools, not academic luxuries.

Core Statistical Concepts Every Engineer Should Know

Descriptive Statistics: Your First Line of Defense

When you collect benchmark data, descriptive statistics help you understand what you're actually looking at.

Mean (Average): The sum divided by count. Useful but dangerous when used alone.

Median: The middle value when sorted. More robust against outliers.

Standard Deviation: Measures how spread out your data is. Low std dev means consistent performance; high std dev indicates variability.

Percentiles (P50, P95, P99): The values below which a certain percentage of observations fall.

Why percentiles matter: In production systems, you care more about "How slow are the slowest 5% of requests?" (everything above P95) than "What's the average experience?" Users don't experience averages.
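
The statistics above can be computed with Python's standard `statistics` module. The following is a minimal sketch: `summarize` is a hypothetical helper name, and the latencies are synthetic (a fast majority plus a slow tail), generated only to show how the mean and the percentiles diverge.

```python
import random
import statistics

def summarize(samples_ms):
    """Return the descriptive statistics discussed above for one sample."""
    # quantiles(n=100) returns 99 cut points; index k - 1 is the k-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean": statistics.mean(samples_ms),
        "median": statistics.median(samples_ms),
        "stdev": statistics.stdev(samples_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }

# Synthetic data (not real measurements): 95% fast requests, 5% slow ones.
rng = random.Random(42)
latencies = [rng.gauss(100, 5) for _ in range(950)]
latencies += [rng.gauss(400, 50) for _ in range(50)]

summary = summarize(latencies)
for name, value in summary.items():
    print(f"{name:>6}: {value:7.1f} ms")
```

With a slow tail like this, the mean is pulled above the median, and P99 sits far above both, which is exactly the information a mean-only report hides.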

Confidence Intervals: Expressing Uncertainty Like an Engineer

A confidence interval provides a range of plausible values for your measurement, considering uncertainty. Instead of saying "Response time is 150ms," you say "Response time is 150ms ± 15ms (95% confidence)."

An intuitive way to think about it is this: if you repeated your experiment many times, and each time calculated a 95% confidence interval, then about 95% of those intervals would contain the true (but unknown) mean response time.

Practical usage: When comparing two systems, overlapping confidence intervals suggest the difference might not be meaningful. Non-overlapping intervals indicate a likely real difference.
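
As a minimal sketch of that comparison, the code below computes a confidence interval for the mean using a normal approximation and checks whether two intervals overlap. The function names and the synthetic samples are assumptions made for illustration. The normal approximation is reasonable for roughly 30 or more samples; for smaller samples a t-distribution critical value would give a wider interval.

```python
import math
import random
import statistics

def mean_confidence_interval(samples, confidence=0.95):
    """Normal-approximation confidence interval for the mean of `samples`."""
    n = len(samples)
    mean = statistics.mean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(n)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err

def intervals_overlap(first, second):
    """True if two (low, high) intervals share at least one point."""
    return first[0] <= second[1] and second[0] <= first[1]

# Synthetic response times in ms (not real measurements).
rng = random.Random(7)
system_a = [rng.gauss(150, 15) for _ in range(50)]
system_b = [rng.gauss(152, 15) for _ in range(50)]

ci_a = mean_confidence_interval(system_a)
ci_b = mean_confidence_interval(system_b)
print(f"A: {ci_a[0]:.1f} to {ci_a[1]:.1f} ms")
print(f"B: {ci_b[0]:.1f} to {ci_b[1]:.1f} ms")
print("overlap:", intervals_overlap(ci_a, ci_b))
```

Overlap is a quick screen rather than a formal test: two intervals can overlap slightly while a direct test on the difference still finds it significant.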

Statistical Laws That Save You From Bad Decisions

Law of Large Numbers: Why Sample Size Matters

The Law of Large Numbers states that as you collect more samples, your measured average gets closer to the true average of the system.

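$$\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i \;\longrightarrow\; \mu \quad \text{as } n \to \infty$$

Where $X_1, X_2, \ldots, X_n$ are independent, identically distributed random variables with expected value $\mu$.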

Engineering implication: Running your benchmark 3 times isn't enough. Neither is 10. You need enough samples for the noise to average out.

Rule of thumb: For stable systems, collecting 30 to 50 samples is usually enough to get a reliable average. If your system is noisy or you're trying to detect small performance differences, you may need hundreds of samples to get meaningful results.

Interactive Demonstration: Law of Large Numbers

See how sample size affects the reliability of your benchmark results:
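
A static version of that demonstration can be sketched in a few lines. The simulation below assumes a made-up system whose true mean latency is 150ms with Gaussian noise; both the noise model and the constant `TRUE_MEAN_MS` are illustrative assumptions. It prints how far the measured average is from the true mean as the sample size grows.

```python
import random
import statistics

TRUE_MEAN_MS = 150.0  # assumed "true" latency of the simulated system

def simulated_latency(rng):
    # Made-up noise model: Gaussian jitter around the true mean.
    return rng.gauss(TRUE_MEAN_MS, 20.0)

rng = random.Random(1)
samples = [simulated_latency(rng) for _ in range(10_000)]

errors = {}
for n in (3, 10, 30, 100, 1_000, 10_000):
    # Average of the first n samples, as if the benchmark had stopped there.
    estimate = statistics.mean(samples[:n])
    errors[n] = abs(estimate - TRUE_MEAN_MS)
    print(f"n={n:>6}: mean={estimate:7.2f} ms  error={errors[n]:5.2f} ms")
```

The error does not have to shrink at every step of a single run, but the trend is downward: large samples pin the average down far more tightly than a handful of runs can.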

Central Limit Theorem: Why Averages Work

The Central Limit Theorem explains why averaging makes sense, even when your underlying data isn't normally distributed.

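$$\frac{\bar{X}_n - \mu}{\sigma / \sqrt{n}} \;\xrightarrow{d}\; \mathcal{N}(0, 1)$$

Where $\bar{X}_n$ is the sample mean, $\mu$ is the population mean, $\sigma$ is the standard deviation, and $\mathcal{N}(0, 1)$ is the standard normal distribution.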

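The theorem: When you repeatedly draw samples of a reasonable size and compute each sample's average, those averages are approximately normally distributed around the true mean, largely regardless of the original distribution's shape (provided its variance is finite). The approximation improves as the sample size grows.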

Engineering implication: This justifies using confidence intervals and statistical tests based on normal distributions, even when individual response times follow other patterns.
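
The theorem can be checked empirically. The sketch below assumes exponentially distributed latencies with a mean of 100ms (a strongly skewed, non-normal shape chosen for illustration), draws many samples of size 50, and compares the spread of the sample means with the predicted standard error.

```python
import math
import random
import statistics

rng = random.Random(3)
MEAN_MS = 100.0  # an exponential distribution has stdev equal to its mean

def sample_mean(n):
    """Average of n synthetic, exponentially distributed latencies."""
    return statistics.mean([rng.expovariate(1 / MEAN_MS) for _ in range(n)])

n = 50
means = [sample_mean(n) for _ in range(2_000)]

observed_center = statistics.mean(means)
observed_spread = statistics.stdev(means)
predicted_spread = MEAN_MS / math.sqrt(n)  # sigma / sqrt(n)

# Share of sample means within 1.96 standard errors of the true mean;
# a normal distribution would put about 95% of them there.
within = sum(abs(m - MEAN_MS) <= 1.96 * predicted_spread for m in means) / len(means)

print(f"center of sample means: {observed_center:.1f} ms")
print(f"spread observed / predicted: {observed_spread:.2f} / {predicted_spread:.2f} ms")
print(f"within 1.96 standard errors: {within:.1%}")
```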

Why you never connected this to engineering work: Most engineering programs teach statistics as a set of abstract mathematical ideas (hypothesis testing, normal distributions, confidence intervals, and so on) but rarely show how to apply them to real-world systems. Maybe you learned t-tests in your probability class, but nobody told you how to apply them to query performance comparisons. You memorized the Central Limit Theorem for your exams, but no one explained how it validates your benchmarking approach.

Common Benchmarking Pitfalls and How to Avoid Them

Pitfall 1: The Single Run Trap

The problem: One measurement tells you almost nothing about system performance. You've captured a single point in a noisy, time-varying system.

The fix: Always run multiple iterations and report distributions.
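
One way to apply that fix is a small harness that repeats the measurement and keeps every sample. This is a minimal sketch: `benchmark` and `workload` are hypothetical names, and `workload` stands in for whatever operation you are actually measuring.

```python
import statistics
import time

def benchmark(fn, runs=30):
    """Call fn() `runs` times and return each duration in milliseconds."""
    durations_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        durations_ms.append((time.perf_counter() - start) * 1000)
    return durations_ms

def workload():
    # Hypothetical stand-in for the operation under test.
    sum(i * i for i in range(50_000))

samples = benchmark(workload, runs=30)
print(f"runs:   {len(samples)}")
print(f"min:    {min(samples):.3f} ms")
print(f"median: {statistics.median(samples):.3f} ms")
print(f"max:    {max(samples):.3f} ms")
print(f"stdev:  {statistics.stdev(samples):.3f} ms")
```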

Pitfall 2: The Mean-Only Mindset

The problem: Reporting only averages hides crucial information about system behavior.

Consider these two systems:

- System A: Response times consistently 100ms ± 5ms

- System B: Response times average 100ms, but range from 50ms to 500ms

Same average, completely different user experience.

The fix: Always report percentiles alongside means.
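
The two systems above can be made concrete with constructed data. In this sketch both lists are built by hand so that each averages exactly 100ms, and `percentile` is a simple nearest-rank helper written for this example rather than a library function.

```python
import math
import statistics

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest value that has at least
    pct percent of the samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Constructed data matching the description above (not real measurements).
system_a = [95] * 25 + [100] * 50 + [105] * 25    # tight around 100 ms
system_b = [50] * 50 + [62.5] * 40 + [500] * 10   # 50 ms to 500 ms

for name, data in (("A", system_a), ("B", system_b)):
    print(
        f"System {name}: mean={statistics.mean(data):.1f} "
        f"P50={percentile(data, 50)} "
        f"P95={percentile(data, 95)} "
        f"P99={percentile(data, 99)}"
    )
```

Both means are 100ms, yet P95 is 105ms for System A and 500ms for System B.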

Pitfall 3: Flaky Test Syndrome

The problem: Your benchmark results vary wildly between runs, making comparisons impossible.

Common causes:

- Insufficient warm-up period

- Background processes interfering

- Inconsistent load conditions

- Measurement overhead

The fix: Control your environment and establish baseline stability.
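
Baseline stability can be checked before any comparison is attempted. The sketch below runs the same workload in several batches after a warm-up and computes the coefficient of variation of the batch medians. The function names and the 5% threshold are illustrative assumptions, not an established standard.

```python
import statistics
import time

def batch_median_ms(fn, runs):
    """Median duration of `runs` calls to fn(), in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)

def stability_check(fn, batches=5, runs_per_batch=20, warmup=10):
    """Return the batch medians and their coefficient of variation."""
    for _ in range(warmup):
        fn()  # warm-up iterations are run but not recorded
    medians = [batch_median_ms(fn, runs_per_batch) for _ in range(batches)]
    cv = statistics.stdev(medians) / statistics.mean(medians)
    return medians, cv

def workload():
    # Hypothetical stand-in for the operation under test.
    sorted(range(20_000, 0, -1))

MAX_CV = 0.05  # illustrative threshold, tune for your system

medians, cv = stability_check(workload)
print("batch medians (ms):", [round(m, 3) for m in medians])
print(f"coefficient of variation: {cv:.1%}")
print("stable enough to compare" if cv <= MAX_CV else "too noisy, fix the environment first")
```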

Practical Guidelines for Robust Benchmarks

1. Planning Your Benchmark

Before writing any code, ask yourself:

- What exactly am I measuring? (Latency? Throughput? Resource usage?)

- What factors might affect the results? (CPU load, memory pressure, network conditions)

- How precise do I need to be? (Is a 5% difference meaningful for this system?)

- What's my baseline? (Current system performance under identical conditions)

2. Environment Control
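
What environment control looks like depends on the platform, so the following is only a sketch of a few measures that can be taken from inside a Python process: discarding warm-up runs, pausing the garbage collector while timing, and recording the environment alongside the results. All function names here are hypothetical.

```python
import gc
import os
import platform
import time
from contextlib import contextmanager

@contextmanager
def quiet_gc():
    """Pause Python's cyclic garbage collector while timing, then restore it."""
    was_enabled = gc.isenabled()
    gc.collect()
    gc.disable()
    try:
        yield
    finally:
        if was_enabled:
            gc.enable()

def describe_environment():
    """Details worth storing next to every set of benchmark results."""
    return {
        "platform": platform.platform(),
        "python": platform.python_version(),
        "cpu_count": os.cpu_count(),
    }

def timed_runs(fn, warmup=5, runs=30):
    """Discard warm-up runs, then time `runs` calls in milliseconds."""
    for _ in range(warmup):
        fn()
    samples = []
    with quiet_gc():
        for _ in range(runs):
            start = time.perf_counter_ns()
            fn()
            samples.append((time.perf_counter_ns() - start) / 1e6)
    return samples

def workload():
    # Hypothetical stand-in for the operation under test.
    [str(i) for i in range(10_000)]

print(describe_environment())
samples = timed_runs(workload)
print(f"{len(samples)} samples, fastest {min(samples):.3f} ms")
```

Measures outside the process, such as stopping background jobs or using dedicated hardware, matter at least as much and are not covered by this sketch.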

3. Comparing Two Systems Rigorously

When you need to determine if System B is actually better than System A:
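
One option that needs no distributional assumptions and no third-party libraries is a permutation test on the difference in means. The sketch below is one possible approach, not necessarily the only or best one; `permutation_test` is a hypothetical helper, and the data is the Endpoint A and Endpoint B example from earlier in this article.

```python
import random

def permutation_test(a, b, rounds=5_000, seed=0):
    """Two-sided permutation test on the difference in means.

    Returns (mean(b) - mean(a), p_value). The p-value estimates how often
    a random relabeling of the pooled samples produces a difference at
    least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = sum(b) / len(b) - sum(a) / len(a)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        fake_a, fake_b = pooled[:len(a)], pooled[len(a):]
        diff = sum(fake_b) / len(fake_b) - sum(fake_a) / len(fake_a)
        # Small tolerance guards against floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return observed, (extreme + 1) / (rounds + 1)

endpoint_a = [145, 152, 148, 151, 149]
endpoint_b = [147, 153, 146, 150, 154]

diff, p_value = permutation_test(endpoint_a, endpoint_b)
print(f"difference in means: {diff:.1f} ms, p-value: {p_value:.2f}")
```

A large p-value means random relabeling produces a gap this size routinely, so the data gives no grounds for calling one system faster. A small one (0.05 is a common, if arbitrary, cutoff) suggests the difference is unlikely to be noise alone.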

Real-World Examples

Example 1: Database Query Performance

You're optimizing a database query and want to measure the impact of adding an index.
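
A self-contained way to try this is with an in-memory SQLite database. Everything in the sketch below (the `orders` table, its columns, the query, and the row counts) is invented for illustration. The point is the shape of the experiment: the same query, timed many times before and after the index is created.

```python
import sqlite3
import statistics
import time

def time_query(conn, sql, params, runs=50):
    """Run the query `runs` times and return durations in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        samples.append((time.perf_counter() - start) * 1000)
    return samples

# Hypothetical schema and data, created only for this example.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    ((i % 5_000, i * 0.01) for i in range(100_000)),
)
conn.commit()

QUERY = "SELECT COUNT(*), SUM(total) FROM orders WHERE customer_id = ?"

before = time_query(conn, QUERY, (1234,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = time_query(conn, QUERY, (1234,))

for label, samples in (("no index", before), ("with index", after)):
    ordered = sorted(samples)
    print(
        f"{label:>10}: median={statistics.median(ordered):.3f} ms "
        f"max={ordered[-1]:.3f} ms"
    )
```

Keeping both full sample lists, rather than two averages, is what makes it possible to apply confidence intervals or a significance test to the comparison afterwards.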

Example 2: HTTP API Latency Analysis

You're comparing two API implementations to decide which to deploy.
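
Since tail latency usually drives this kind of decision, one possible approach is a bootstrap confidence interval for the difference in P95 between the two implementations. The sketch below uses synthetic log-normal latencies in place of real measurements, and `p95` and `bootstrap_diff_ci` are hypothetical helper names.

```python
import math
import random

def p95(samples):
    """Nearest-rank 95th percentile."""
    ordered = sorted(samples)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]

def bootstrap_diff_ci(a, b, stat, rounds=2_000, confidence=0.95, seed=0):
    """Percentile-bootstrap interval for stat(b) - stat(a)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(rounds):
        # Resample each group with replacement, keeping its original size.
        resample_a = rng.choices(a, k=len(a))
        resample_b = rng.choices(b, k=len(b))
        diffs.append(stat(resample_b) - stat(resample_a))
    diffs.sort()
    lo_idx = int((1 - confidence) / 2 * rounds)
    hi_idx = int((1 + confidence) / 2 * rounds) - 1
    return diffs[lo_idx], diffs[hi_idx]

# Synthetic latencies in ms: similar medians (near 100 ms), B has a heavier tail.
rng = random.Random(5)
impl_a = [rng.lognormvariate(4.6, 0.25) for _ in range(300)]
impl_b = [rng.lognormvariate(4.6, 0.40) for _ in range(300)]

lo, hi = bootstrap_diff_ci(impl_a, impl_b, p95)
print(f"P95 A: {p95(impl_a):.1f} ms, P95 B: {p95(impl_b):.1f} ms")
print(f"95% interval for P95(B) - P95(A): {lo:.1f} to {hi:.1f} ms")
```

If the interval excludes zero, the data supports a real difference in tail latency; if it straddles zero, more samples are needed before choosing on that basis.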

Example 3: Load Testing and Capacity Planning

You need to determine how many concurrent users your system can handle.
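
One simple way to frame the answer is to run the load test at several concurrency levels and find the highest level whose P95 latency stays within a target. In the sketch below the latency numbers, the 300ms target, and the function names are all hypothetical; real capacity planning would use many more samples per level.

```python
import math

def p95(samples):
    """Nearest-rank 95th percentile."""
    ordered = sorted(samples)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]

def max_load_within_slo(latency_by_users, slo_ms):
    """Highest tested concurrency whose P95 meets the target, stopping at
    the first level that fails. Returns None if even the lowest fails."""
    best = None
    for users in sorted(latency_by_users):
        if p95(latency_by_users[users]) > slo_ms:
            break
        best = users
    return best

# Hypothetical load-test results: concurrent users -> latencies in ms.
results = {
    50: [110, 112, 115, 118, 119, 120, 121, 125, 130, 140],
    100: [120, 125, 128, 130, 135, 140, 150, 155, 160, 180],
    200: [150, 160, 170, 180, 190, 200, 220, 240, 260, 290],
    400: [200, 250, 300, 350, 400, 450, 500, 600, 750, 900],
}

SLO_MS = 300  # hypothetical latency target

for users in sorted(results):
    print(f"{users:>4} users: P95 = {p95(results[users])} ms")
print("capacity within SLO:", max_load_within_slo(results, SLO_MS), "users")
```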

Interpreting Distributions Over Time

When monitoring production systems, you need to understand how performance metrics evolve. Here's how to track and interpret trends:
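
A rolling percentile compared against a baseline is one simple way to do this. The sketch below assumes a plain list of latency samples in arrival order; the window size, the 10% tolerance, and the function names are illustrative choices, and the series is synthetic with a deliberate shift from about 100ms to about 130ms.

```python
import math
import random
from collections import deque

def p95(samples):
    """Nearest-rank 95th percentile."""
    ordered = sorted(samples)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]

def rolling_p95(values, window):
    """Yield the P95 of the most recent `window` values once it is full."""
    recent = deque(maxlen=window)
    for value in values:
        recent.append(value)
        if len(recent) == window:
            yield p95(recent)

def first_regression(values, window, baseline_p95, tolerance=0.20):
    """Index of the first window whose P95 exceeds the baseline by more
    than `tolerance` (as a fraction), or None if none does."""
    for i, current in enumerate(rolling_p95(values, window)):
        if current > baseline_p95 * (1 + tolerance):
            return i
    return None

# Synthetic series: latency shifts upward after 300 samples.
rng = random.Random(11)
series = [rng.gauss(100, 5) for _ in range(300)]
series += [rng.gauss(130, 5) for _ in range(300)]

baseline = p95(series[:100])
idx = first_regression(series, window=50, baseline_p95=baseline, tolerance=0.10)
print(f"baseline P95: {baseline:.1f} ms")
print(f"first regressed window starts at sample {idx}")
```

A window-based check like this reacts a few samples after the shift rather than at the exact moment it happens, which is the price of not alerting on single slow requests.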

Building Your Statistical Toolkit

As an engineer, you don't need to become a statistician, but having the right tools makes all the difference. Here's a practical toolkit:

Essential Python Libraries

Ready-to-Use Functions

Conclusion: Making Statistics Work for You

Statistics isn't a theoretical construct confined to the ivory tower of academia. Applied to real measurements, it helps you make better-informed decisions about the systems you build and operate.

Sample size matters. The Law of Large Numbers is not just academic theory; it is the reason you need many samples before you can trust a benchmark. The larger the sample, the more reliable the result.

Don't stop at averages. Look at the whole distribution, particularly the slow experiences captured by high percentiles like P95 and P99, which matter most to users.

Lastly, keep your testing environment consistent. If you cannot reproduce your benchmark environment, you cannot trust comparisons between runs.

Points to remember

Before you run any benchmark, ask yourself:

- Do you have enough samples?

- Are you looking at the right metrics?

- Can you quantify the uncertainty in your measurements?

- Do the differences you see actually matter?

These tools won't speed up your systems by themselves, but they will tell you whether your work is making a real difference, and that kind of clarity is priceless.